The reason being is that this approach will dump the whole DynamoDB row on a single line and we’ll import it into Redshift.

The benefit though is that it gives you a little more flexibility and means you can be truly “schema-less” in your DynamoDB approach. This option is a little more involved and will require more upfront development. Note that the export will be in JSON format so you may need to provide a JSON paths file to help with the load to Redshift.Īs you can see, this option will allow any Dynamo column types to be exported to a flat file and ingested into Redshift however you like.

You can obviously extend this pipeline to then copy that S3 data into Redshift. What this does is create a pipeline that spins up an EMR cluster to run a Map Reduce job that processes data from DynamoDB and puts it into a file format to be dumped into S3. You will see in the templates drop down that there is a template preconfigured to export DynamoDB data to S3. Simply go into Data Pipeline within the AWS console and create a new pipeline. This is a supported option with an out of the box Data Pipeline that has been preconfigured for you. Let’s look at some other options… DynamoDB to S3 Export using Data Pipeline So what happens if we want to take the preferred approach from section 1 and use a DynamoDB map to store our JSON payloads? The Map datatype isn’t supported and therefore can’t be loaded using this approach. If a COPY command tries to load an attribute with an unsupported data type, the command will fail. The Amazon DynamoDB BINARY and SET data types are not supported. Only Amazon DynamoDB attributes with scalar STRING and NUMBER data types are supported.

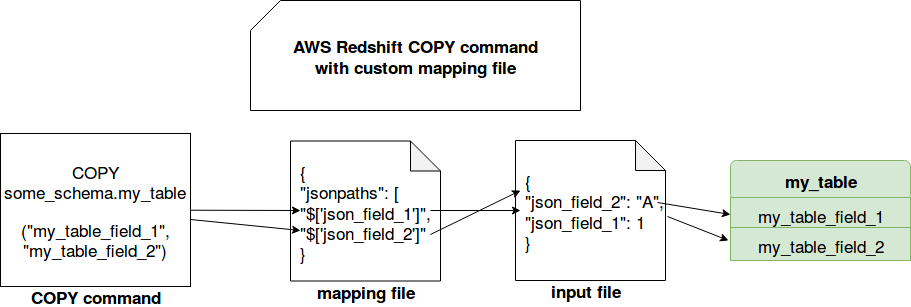

However there are drawbacks with this method that will prevent you from loading complex data types. You simply need to create a table in Redshift that matches that of your DynamoDB table and simply run a Copy command that looks as follows:ĭata from the ProductCatalog DynamoDB table will be copied into the Redshift table called favoritemovies. This is the simplest way to get data into Redshift from DynamoDB or S3.

#Redshift json how to#

I’ll also outline how to deal with this scenario. You have a few data storage options when doing this: storing the whole payload as a string or storing it within a DynamoDB Map.Įach have their pros and cons which I’ll discuss below and one approach in particular affects how we consume this data in Redshift. It’s fairly common practice to store JSON payloads in DynamoDB.

0 kommentar(er)

0 kommentar(er)